column status format a10

set feedback off

set serveroutput on

select username, sid, serial#, process, status

from v$session

where username is not null

/

column username format a20

column sql_text format a55 word_wrapped

set serveroutput on size 1000000

declare

x number;

begin

for x in

( select username||'('||sid||','||serial#||

') ospid = ' || process ||

' program = ' || program username,

to_char(LOGON_TIME,' Day HH24:MI') logon_time,

to_char(sysdate,' Day HH24:MI') current_time,

sql_address, LAST_CALL_ET

from v$session

where status = 'ACTIVE'

and rawtohex(sql_address) <> '00'

and username is not null order by last_call_et )

loop

for y in ( select max(decode(piece,0,sql_text,null)) ||

max(decode(piece,1,sql_text,null)) ||

max(decode(piece,2,sql_text,null)) ||

max(decode(piece,3,sql_text,null))

sql_text

from v$sqltext_with_newlines

where address = x.sql_address

and piece < 4)

loop

if ( y.sql_text not like '%listener.get_cmd%' and

y.sql_text not like '%RAWTOHEX(SQL_ADDRESS)%')

then

dbms_output.put_line( '--------------------' );

dbms_output.put_line( x.username );

dbms_output.put_line( x.logon_time || ' ' ||

x.current_time||

' last et = ' ||

x.LAST_CALL_ET);

dbms_output.put_line(

substr( y.sql_text, 1, 250 ) );

end if;

end loop;

end loop;

end;

Tuesday, October 31, 2006

Tuesday, October 24, 2006

9.2.0.6=>9.2.0.7 upgrade on windows platform

Error occurs when progress goes to 51%.

To fix this error, stop the service "Distributed Transaction coordinator".

To fix this error, stop the service "Distributed Transaction coordinator".

Sunday, October 22, 2006

How to obtain the number of the blocks that really contain data

実際にデータを含んでいるデータブロック数を調べる方法はANALYZE TABLEがよく使用されますが、これよりも早い方法があります。

実際に全件検索を行って数えてしまう方法です。

以下のSQLで実現できます。

oracle7.X

select count(distinct(substr(rowid,1,8)||substr(rowid,15,4))) blocks_used from [テーブル名];

oracle8.X

select count(distinct(substr(rowid,1,15))) blocks_used from [テーブル名];

これにより、実データサイズを算出することが出来ます。

実データ=上記SELECT文のblock数 * ブロックサイズ(初期化パラメータdb_block_size)ただし、PCTFREE/PCTUSEDなどの設定や断片化によるオーバヘッドがありえるため、完全なデータサイズではありません。

あくまでも実際にデータを含んでいるデータブロックを計算しています。

実際に全件検索を行って数えてしまう方法です。

以下のSQLで実現できます。

oracle7.X

select count(distinct(substr(rowid,1,8)||substr(rowid,15,4))) blocks_used from [テーブル名];

oracle8.X

select count(distinct(substr(rowid,1,15))) blocks_used from [テーブル名];

これにより、実データサイズを算出することが出来ます。

実データ=上記SELECT文のblock数 * ブロックサイズ(初期化パラメータdb_block_size)ただし、PCTFREE/PCTUSEDなどの設定や断片化によるオーバヘッドがありえるため、完全なデータサイズではありません。

あくまでも実際にデータを含んでいるデータブロックを計算しています。

Thursday, October 19, 2006

How to change password back

SQL> SELECT PASSWORD FROM DBA_USERS WHERE USERNAME = 'test';

PASSWORD

------------------------------

F84870711C9D9E90

SQL> ALTER USER test IDENTIFIED BY ABC;

用户已更改。

SQL> ALTER USER DAIJC IDENTIFIED BY VALUES 'F84870711C9D9E90';

用户已更改。

PASSWORD

------------------------------

F84870711C9D9E90

SQL> ALTER USER test IDENTIFIED BY ABC;

用户已更改。

SQL> ALTER USER DAIJC IDENTIFIED BY VALUES 'F84870711C9D9E90';

用户已更改。

tablespace usage

select t.*

from (SELECT D.TABLESPACE_NAME,

SPACE "SUM_SPACE(M)",

BLOCKS SUM_BLOCKS,

SPACE - NVL(FREE_SPACE, 0) "USED_SPACE(M)",

ROUND((1 - NVL(FREE_SPACE, 0) / SPACE) * 100, 2) "USED_RATE(%)",

FREE_SPACE "FREE_SPACE(M)"

FROM (SELECT TABLESPACE_NAME,

ROUND(SUM(BYTES) / (1024 * 1024), 2) SPACE,

SUM(BLOCKS) BLOCKS

FROM DBA_DATA_FILES

GROUP BY TABLESPACE_NAME) D,

(SELECT TABLESPACE_NAME,

ROUND(SUM(BYTES) / (1024 * 1024), 2) FREE_SPACE

FROM DBA_FREE_SPACE

GROUP BY TABLESPACE_NAME) F

WHERE D.TABLESPACE_NAME = F.TABLESPACE_NAME(+)

UNION ALL --if have tempfile

SELECT D.TABLESPACE_NAME,

SPACE "SUM_SPACE(M)",

BLOCKS SUM_BLOCKS,

USED_SPACE "USED_SPACE(M)",

ROUND(NVL(USED_SPACE, 0) / SPACE * 100, 2) "USED_RATE(%)",

SPACE - USED_SPACE "FREE_SPACE(M)"

FROM (SELECT TABLESPACE_NAME,

ROUND(SUM(BYTES) / (1024 * 1024), 2) SPACE,

SUM(BLOCKS) BLOCKS

FROM DBA_TEMP_FILES

GROUP BY TABLESPACE_NAME) D,

(SELECT TABLESPACE,

ROUND(SUM(BLOCKS * 8192) / (1024 * 1024), 2) USED_SPACE

FROM V$SORT_USAGE

GROUP BY TABLESPACE) F

WHERE D.TABLESPACE_NAME = F.TABLESPACE(+)) t

order by "USED_RATE(%)" desc;

from (SELECT D.TABLESPACE_NAME,

SPACE "SUM_SPACE(M)",

BLOCKS SUM_BLOCKS,

SPACE - NVL(FREE_SPACE, 0) "USED_SPACE(M)",

ROUND((1 - NVL(FREE_SPACE, 0) / SPACE) * 100, 2) "USED_RATE(%)",

FREE_SPACE "FREE_SPACE(M)"

FROM (SELECT TABLESPACE_NAME,

ROUND(SUM(BYTES) / (1024 * 1024), 2) SPACE,

SUM(BLOCKS) BLOCKS

FROM DBA_DATA_FILES

GROUP BY TABLESPACE_NAME) D,

(SELECT TABLESPACE_NAME,

ROUND(SUM(BYTES) / (1024 * 1024), 2) FREE_SPACE

FROM DBA_FREE_SPACE

GROUP BY TABLESPACE_NAME) F

WHERE D.TABLESPACE_NAME = F.TABLESPACE_NAME(+)

UNION ALL --if have tempfile

SELECT D.TABLESPACE_NAME,

SPACE "SUM_SPACE(M)",

BLOCKS SUM_BLOCKS,

USED_SPACE "USED_SPACE(M)",

ROUND(NVL(USED_SPACE, 0) / SPACE * 100, 2) "USED_RATE(%)",

SPACE - USED_SPACE "FREE_SPACE(M)"

FROM (SELECT TABLESPACE_NAME,

ROUND(SUM(BYTES) / (1024 * 1024), 2) SPACE,

SUM(BLOCKS) BLOCKS

FROM DBA_TEMP_FILES

GROUP BY TABLESPACE_NAME) D,

(SELECT TABLESPACE,

ROUND(SUM(BLOCKS * 8192) / (1024 * 1024), 2) USED_SPACE

FROM V$SORT_USAGE

GROUP BY TABLESPACE) F

WHERE D.TABLESPACE_NAME = F.TABLESPACE(+)) t

order by "USED_RATE(%)" desc;

Wednesday, October 18, 2006

Incrementally Updated Backups: A Basic Example

To create incremental backups for use in an incrementally updated backups strategy, you must use the BACKUP... FOR RECOVER OF COPY WITH TAG form of the BACKUP command. How the command works is best understood in the context of an example script that would implement the strategy.

This script, run on a regular basis, is all that is required to implement a strategy based on incrementally updated backups:

RUN {

RECOVER COPY OF DATABASE WITH TAG 'incr_update';

BACKUP INCREMENTAL LEVEL 1 FOR RECOVER OF COPY WITH TAG 'incr_update' DATABASE;

}

The syntax used in the script does not, however, make it clear how the strategy works. To understand the script and the strategy, it is necessary to understand the effects of these two commands when no datafile copies or incremental backups exist.

The RECOVER COPY OF DATABASE WITH TAG... command causes RMAN to apply any available incremental level 1 backups to a set of datafile copies with the specified tag.

If there is no incremental backup or no datafile copy, the command generates a message but does not generate an error.

The first time the script runs, this command has no effect, because there is neither a datafile copy nor a level 1 incremental backup.

The second time the script runs, there is a datafile copy (created by the first BACKUP command), but no incremental level 1 backup, so again, the command has no effect.

On the third run and all subsequent runs, there is a datafile copy and a level 1 incremental from the previous run, so the level 1 incremental is applied to the datafile copy, bringing the datafile copy up to the checkpoint SCN of the level 1 incremental.

---------------------------------------------------------------------------

如果不想使用这种循环执行的方式,而是希望手动完成:

1.创建lv0的image copy

backup as copy incremental level 0 database

2.创建lv1的backup set

BACKUP INCREMENTAL LEVEL 1 FOR RECOVER OF COPY DATABASE

这样,当需要更新image copy的时候运行:

RECOVER COPY OF DATABASE

(以上3条语句可以加上tag参数)

This script, run on a regular basis, is all that is required to implement a strategy based on incrementally updated backups:

RUN {

RECOVER COPY OF DATABASE WITH TAG 'incr_update';

BACKUP INCREMENTAL LEVEL 1 FOR RECOVER OF COPY WITH TAG 'incr_update' DATABASE;

}

The syntax used in the script does not, however, make it clear how the strategy works. To understand the script and the strategy, it is necessary to understand the effects of these two commands when no datafile copies or incremental backups exist.

The RECOVER COPY OF DATABASE WITH TAG... command causes RMAN to apply any available incremental level 1 backups to a set of datafile copies with the specified tag.

If there is no incremental backup or no datafile copy, the command generates a message but does not generate an error.

The first time the script runs, this command has no effect, because there is neither a datafile copy nor a level 1 incremental backup.

The second time the script runs, there is a datafile copy (created by the first BACKUP command), but no incremental level 1 backup, so again, the command has no effect.

On the third run and all subsequent runs, there is a datafile copy and a level 1 incremental from the previous run, so the level 1 incremental is applied to the datafile copy, bringing the datafile copy up to the checkpoint SCN of the level 1 incremental.

---------------------------------------------------------------------------

如果不想使用这种循环执行的方式,而是希望手动完成:

1.创建lv0的image copy

backup as copy incremental level 0 database

2.创建lv1的backup set

BACKUP INCREMENTAL LEVEL 1 FOR RECOVER OF COPY DATABASE

这样,当需要更新image copy的时候运行:

RECOVER COPY OF DATABASE

(以上3条语句可以加上tag参数)

Tuesday, October 17, 2006

How to manage flash recovery area and restore point

Oracle

oracle 10.2.0.2

Restore point and flash recovery area space management

Definition

-------------

The flash recovery area is an Oracle-managed directory, file system, or Automatic Storage Management disk group that

provides a centralized disk location for backup and recovery files. Oracle creates archived logs in the flash recovery area.

RMAN can store its backups in the flash recovery area, and it uses it when restoring files during media recovery.

Creating a normal restore point assigns the restore point name to a specific point in time or SCN, as a kind of bookmark

or alias you can use with commands that recognize a RESTORE POINT clause as a shorthand for specifying an SCN.

Like normal restore points, guaranteed restore points can be used as aliases for SCNs in recovery operations. However,

they also provide specific functionality related to the use of the Flashback Database feature.

About the Flashback Database Window

---------------------------------------------------------

DB_FLASHBACK_RETENTION_TARGET specifies the upper limit (in minutes) on how far back in time the database may

be flashed back. How far back one can flashback a database depends on how much flashback data Oracle has kept in the

flash recovery area.

The flashback retention target is a target, not an absolute guarantee that Flashback Database will be available.

If your flash recovery area is not large enough to hold both the flashback logs and files that must be retained to meet the

retention policy, such as archived redo logs and other backups, then the flashback logs from the earliest SCNs may be

deleted to make room in the flash recovery area for other files.

When flashback logging is enabled, the earliest SCN in the flashback database window can be determined by querying V$FLASHBACK_DATABASE_LOG.OLDEST_FLASHBACK_SCN and

V$FLASHBACK_DATABASE_LOG.OLDEST_FLASHBACK_TIME as shown in this example:

SELECT OLDEST_FLASHBACK_SCN, OLDEST_FLASHBACK_TIME

FROM V$FLASHBACK_DATABASE_LOG;

Requirements for Using Guaranteed Restore Points

--------------------------------------------------------------------------

The COMPATIBLE initialization parameter must be set to 10.2 or greater.

The database must be running in ARCHIVELOG mode. The FLASHBACK DATABASE operation used to return your

database to a guaranteed restore point requires the use of archived redo logs from around the time of the restore point.

A flash recovery area must be configured, as described in "Setting Up a Flash Recovery Area for RMAN". Guaranteed

restore points use a mechanism similar to flashback logging, and as with flashback logging, Oracle must store the required

logs in the flash recovery area.

If flashback database is not enabled, then the database must be mounted, not open, when creating the first guaranteed

restore point (or if all previously created guaranteed restore points have been dropped).

Note: There are no special requirements for using normal restore points.

Age Out Rules of Restore Points

-----------------------------------------------

Normal restore points eventually age out of the control file, even if not explicitly dropped.

The rules governing retention of restore points in the control file are:

-The most recent 2048 restore points are always kept in the control file, regardless of their age.

-Any restore point more recent than the value of CONTROL_FILE_RECORD_KEEP_TIME is retained,

regardless of how many restore points are defined.

Normal restore points that do not meet either of these conditions may age out of the control file.

Guaranteed restore points never age out of the control file. They remain until they are explicitly dropped.

About Logging for Flashback Database and Guaranteed Restore Points

-------------------------------------------------------------------------------------------------------

If no files are eligible for deletion from the flash recovery area because of the requirements imposed by your retention policy

and the guaranteed restore point, then the database behaves as if it has encountered a disk full condition. In many

circumstances, this causes your database to halt.

・Logging for Guaranteed Restore Points With Flashback Logging Disabled

The available block images can be used to re-create the datafile contents at the time of a guaranteed restore point using

FLASHBACK DATABASE, but you cannot use FLASHBACK DATABASE to reach points in time between the guaranteed

restore points and the current time, as is possible when logging for Flashback Database is enabled. If you need to return

the database to an intermediate point in time, your only option is database point-in-time recovery.

Because each block that changes is only logged once, disk space usage for logging for guaranteed restore points when

flashback logging is disabled is generally considerably less than normal flashback logging. You could maintain a guaranteed

restore point for days or even weeks without concern over the ongoing growth of flashback logs that occurs if logging for

Flashback Database is enabled. The performance overhead of logging for a guaranteed restore point without flashback

database logging is generally lower as well.

・Logging for Flashback Database With Guaranteed Restore Points Defined

If Flashback Database is enabled and one or more guaranteed restore points is defined, then the database performs normal

flashback logging, which causes some performance overhead and, depending upon the pattern of activity on your database,

can cause signifcant space pressure in the flash recovery area. However, unlike normal logging for Flashback Database,

the flash recovery area always retains the flashback logs required to allow FLASHBACK DATABASE to any time as far back

as the earliest currently defined guaranteed restore point. Flashback logs are not deleted in response to space pressure,

if they are required to satisfy the guarantee.

Estimating Disk Space Requirements for Flashback Database Logs

--------------------------------------------------------------------------------------------------

The V$FLASHBACK_DATABASE_LOG view can help you estimate how much space to add to your flash recovery area

for flashback logs. After you have enabled logging for Flashback Database and set a flashback retention target, allow the

database to run under a normal workload for a while, to generate a representative sample of flashback logs. Then run the

following query:

SQL> SELECT ESTIMATED_FLASHBACK_SIZE FROM V$FLASHBACK_DATABASE_LOG

Rules for Retention and Deletion of Flashback Logs

--------------------------------------------------------------------------

The following rules govern the flash recovery area's creation, retention, overwriting and deletion of flashback logs:

A flashback log is created whenever necessary to satisfy the flashback retention target, as long as there is enough space

in the flash recovery area.

A flashback log can be reused, once it is old enough that it is no longer needed to satisfy the flashback retention target.

If the database needs to create a new flashback log and the flash recovery area is full or there is no disk space, then the

oldest flashback log is reused instead.

If the flash recovery area is full, then an archived redo log may be automatically deleted by the flash recovery area to make

space for other files. In such a case, any flashback logs that would require the use of that redo log file for the use of

FLASHBACK DATABASE are also deleted.

Obtain Information, Space Management Rules and How to Resolve Space Pressure

------------------------------------------------------------------------------------------------------------------------

You can query the V$RECOVERY_FILE_DEST view to find out the current location, disk quota, space in use, space

reclaimable by deleting files, and total number of files in the Flash Recovery Area.

SQL> SELECT * FROM V$RECOVERY_FILE_DEST;

Oracle does not delete eligible files from the Flash Recovery Area until the space must be reclaimed for some other purpose.

The following rules apply for files to become eligible for deletion from the Flash Recovery Area:

-Any backups which have become obsolete as per the retention policy.

-Any files in the Flash Recovery Area which has been already backed up to a tertiary device such as tape.

-Flashback logs may be deleted from the Flash Recovery Area to make space available for other required files.

There are a number of choices on how to resolve a full Flash Recovery Area when there are NO files eligible for deletion:

-Make more disk space available, and increase DB_RECOVERY_FILE_DEST_SIZE to reflect the new space.

-Use the command BACKUP RECOVERY AREA, to back up the contents of the Flash Recovery Area to a tertiary

device such as tape.

-Delete unnecessary files from the Flash Recovery Area using the RMAN delete command.

-You may also need to consider changing your backup retention policy

-When using Data Guard, consider changing your archivelog deletion policy.

Reference:

Oracle® Database Backup and Recovery Basics

10g Release 2 (10.2)

Part Number B14192-03

(Data Protection with Restore Points and Flashback Database)

Oracle® Database Reference

10g Release 2 (10.2)

Part Number B14237-02

(DB_FLASHBACK_RETENTION_TARGET)

MetaLink Note:305812.1 Flash Recovery area - Space management Warning & Alerts

MetaLink Note:315098.1 How is the space pressure managed in the Flash Recovery Area - An Example.

oracle 10.2.0.2

Restore point and flash recovery area space management

Definition

-------------

The flash recovery area is an Oracle-managed directory, file system, or Automatic Storage Management disk group that

provides a centralized disk location for backup and recovery files. Oracle creates archived logs in the flash recovery area.

RMAN can store its backups in the flash recovery area, and it uses it when restoring files during media recovery.

Creating a normal restore point assigns the restore point name to a specific point in time or SCN, as a kind of bookmark

or alias you can use with commands that recognize a RESTORE POINT clause as a shorthand for specifying an SCN.

Like normal restore points, guaranteed restore points can be used as aliases for SCNs in recovery operations. However,

they also provide specific functionality related to the use of the Flashback Database feature.

About the Flashback Database Window

---------------------------------------------------------

DB_FLASHBACK_RETENTION_TARGET specifies the upper limit (in minutes) on how far back in time the database may

be flashed back. How far back one can flashback a database depends on how much flashback data Oracle has kept in the

flash recovery area.

The flashback retention target is a target, not an absolute guarantee that Flashback Database will be available.

If your flash recovery area is not large enough to hold both the flashback logs and files that must be retained to meet the

retention policy, such as archived redo logs and other backups, then the flashback logs from the earliest SCNs may be

deleted to make room in the flash recovery area for other files.

When flashback logging is enabled, the earliest SCN in the flashback database window can be determined by querying V$FLASHBACK_DATABASE_LOG.OLDEST_FLASHBACK_SCN and

V$FLASHBACK_DATABASE_LOG.OLDEST_FLASHBACK_TIME as shown in this example:

SELECT OLDEST_FLASHBACK_SCN, OLDEST_FLASHBACK_TIME

FROM V$FLASHBACK_DATABASE_LOG;

Requirements for Using Guaranteed Restore Points

--------------------------------------------------------------------------

The COMPATIBLE initialization parameter must be set to 10.2 or greater.

The database must be running in ARCHIVELOG mode. The FLASHBACK DATABASE operation used to return your

database to a guaranteed restore point requires the use of archived redo logs from around the time of the restore point.

A flash recovery area must be configured, as described in "Setting Up a Flash Recovery Area for RMAN". Guaranteed

restore points use a mechanism similar to flashback logging, and as with flashback logging, Oracle must store the required

logs in the flash recovery area.

If flashback database is not enabled, then the database must be mounted, not open, when creating the first guaranteed

restore point (or if all previously created guaranteed restore points have been dropped).

Note: There are no special requirements for using normal restore points.

Age Out Rules of Restore Points

-----------------------------------------------

Normal restore points eventually age out of the control file, even if not explicitly dropped.

The rules governing retention of restore points in the control file are:

-The most recent 2048 restore points are always kept in the control file, regardless of their age.

-Any restore point more recent than the value of CONTROL_FILE_RECORD_KEEP_TIME is retained,

regardless of how many restore points are defined.

Normal restore points that do not meet either of these conditions may age out of the control file.

Guaranteed restore points never age out of the control file. They remain until they are explicitly dropped.

About Logging for Flashback Database and Guaranteed Restore Points

-------------------------------------------------------------------------------------------------------

If no files are eligible for deletion from the flash recovery area because of the requirements imposed by your retention policy

and the guaranteed restore point, then the database behaves as if it has encountered a disk full condition. In many

circumstances, this causes your database to halt.

・Logging for Guaranteed Restore Points With Flashback Logging Disabled

The available block images can be used to re-create the datafile contents at the time of a guaranteed restore point using

FLASHBACK DATABASE, but you cannot use FLASHBACK DATABASE to reach points in time between the guaranteed

restore points and the current time, as is possible when logging for Flashback Database is enabled. If you need to return

the database to an intermediate point in time, your only option is database point-in-time recovery.

Because each block that changes is only logged once, disk space usage for logging for guaranteed restore points when

flashback logging is disabled is generally considerably less than normal flashback logging. You could maintain a guaranteed

restore point for days or even weeks without concern over the ongoing growth of flashback logs that occurs if logging for

Flashback Database is enabled. The performance overhead of logging for a guaranteed restore point without flashback

database logging is generally lower as well.

・Logging for Flashback Database With Guaranteed Restore Points Defined

If Flashback Database is enabled and one or more guaranteed restore points is defined, then the database performs normal

flashback logging, which causes some performance overhead and, depending upon the pattern of activity on your database,

can cause signifcant space pressure in the flash recovery area. However, unlike normal logging for Flashback Database,

the flash recovery area always retains the flashback logs required to allow FLASHBACK DATABASE to any time as far back

as the earliest currently defined guaranteed restore point. Flashback logs are not deleted in response to space pressure,

if they are required to satisfy the guarantee.

Estimating Disk Space Requirements for Flashback Database Logs

--------------------------------------------------------------------------------------------------

The V$FLASHBACK_DATABASE_LOG view can help you estimate how much space to add to your flash recovery area

for flashback logs. After you have enabled logging for Flashback Database and set a flashback retention target, allow the

database to run under a normal workload for a while, to generate a representative sample of flashback logs. Then run the

following query:

SQL> SELECT ESTIMATED_FLASHBACK_SIZE FROM V$FLASHBACK_DATABASE_LOG

Rules for Retention and Deletion of Flashback Logs

--------------------------------------------------------------------------

The following rules govern the flash recovery area's creation, retention, overwriting and deletion of flashback logs:

A flashback log is created whenever necessary to satisfy the flashback retention target, as long as there is enough space

in the flash recovery area.

A flashback log can be reused, once it is old enough that it is no longer needed to satisfy the flashback retention target.

If the database needs to create a new flashback log and the flash recovery area is full or there is no disk space, then the

oldest flashback log is reused instead.

If the flash recovery area is full, then an archived redo log may be automatically deleted by the flash recovery area to make

space for other files. In such a case, any flashback logs that would require the use of that redo log file for the use of

FLASHBACK DATABASE are also deleted.

Obtain Information, Space Management Rules and How to Resolve Space Pressure

------------------------------------------------------------------------------------------------------------------------

You can query the V$RECOVERY_FILE_DEST view to find out the current location, disk quota, space in use, space

reclaimable by deleting files, and total number of files in the Flash Recovery Area.

SQL> SELECT * FROM V$RECOVERY_FILE_DEST;

Oracle does not delete eligible files from the Flash Recovery Area until the space must be reclaimed for some other purpose.

The following rules apply for files to become eligible for deletion from the Flash Recovery Area:

-Any backups which have become obsolete as per the retention policy.

-Any files in the Flash Recovery Area which has been already backed up to a tertiary device such as tape.

-Flashback logs may be deleted from the Flash Recovery Area to make space available for other required files.

There are a number of choices on how to resolve a full Flash Recovery Area when there are NO files eligible for deletion:

-Make more disk space available, and increase DB_RECOVERY_FILE_DEST_SIZE to reflect the new space.

-Use the command BACKUP RECOVERY AREA, to back up the contents of the Flash Recovery Area to a tertiary

device such as tape.

-Delete unnecessary files from the Flash Recovery Area using the RMAN delete command.

-You may also need to consider changing your backup retention policy

-When using Data Guard, consider changing your archivelog deletion policy.

Reference:

Oracle® Database Backup and Recovery Basics

10g Release 2 (10.2)

Part Number B14192-03

(Data Protection with Restore Points and Flashback Database)

Oracle® Database Reference

10g Release 2 (10.2)

Part Number B14237-02

(DB_FLASHBACK_RETENTION_TARGET)

MetaLink Note:305812.1 Flash Recovery area - Space management Warning & Alerts

MetaLink Note:315098.1 How is the space pressure managed in the Flash Recovery Area - An Example.

RMAN: Full Recovery When the Recovery Catalog and Controlfile are Lost

当数据库使用controlfile存放repository而没有使用catalog的时候,丢失所有的controlfile就等于丢失repository。

前提是有controlfile的自动备份,或者知道手动备份的备份集

对应方法:

1.startup nomount

2.rman target /

3.set dbid=XXXXXXX

(如果不知道dbid,第4步时需要手动在备份集中指定controlfile的备份)

4.restore controlfile from autobackup;

(如果第3步没有设置dbid,restore controlfile from 'backupset_name';)

注意:rman只会搜索windows的%ORACLE_HOME%/database或unix的$ORACLE_HOME/dbs目录

5. sql 'alter database mount'

6.restore database

7.recover database

(如果你的online redo log也损坏了,需要指定until条件)

8.sql 'alter database open resetlogs'

9.重新备份数据库

前提是有controlfile的自动备份,或者知道手动备份的备份集

对应方法:

1.startup nomount

2.rman target /

3.set dbid=XXXXXXX

(如果不知道dbid,第4步时需要手动在备份集中指定controlfile的备份)

4.restore controlfile from autobackup;

(如果第3步没有设置dbid,restore controlfile from 'backupset_name';)

注意:rman只会搜索windows的%ORACLE_HOME%/database或unix的$ORACLE_HOME/dbs目录

5. sql 'alter database mount'

6.restore database

7.recover database

(如果你的online redo log也损坏了,需要指定until条件)

8.sql 'alter database open resetlogs'

9.重新备份数据库

Thursday, October 12, 2006

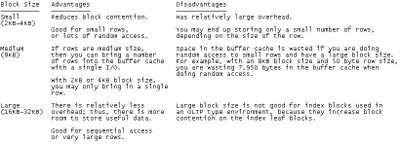

guidelines for db_block_size

Verdict

Verdict~~~~~

Use db_block_size = 2048

only if you really know what you are doing. The best use of 2KB database blocks that I know is in stress tests in which you are trying to drive server workload artificially high so you can analyze the bottlenecks less expensively (i.e., without generating mountains of test data and test transactions).

Use db_block_size = 8192

for most large transactional processing systems. This represents a good balance between advantages and the disadvantage for undo segments.

Use db_block_size of larger than 8KB

for OLTP systems in which your data structures drive the block size to a naturally larger size. This will help to to avoid chained and migrated rows.

Use db_block_size of larger values than 8KB

for systems in which your undo generation is not a meaningful part of your workload. Data warehouses fit this profile. With bigger blocks, you reduce total system I/O setup costs dramatically, yet you incur none of the disadvantages that you would incur in an OLTP system because people generally are not executing transactions (inserts, updates, deletes, and selects for update).

The maximum size of a single index entry is approximately one-half the data block size(8i only).

block size maximum size of a single index entry

(tested on RHEL2.1, oracle8.1.7)

~~~~~~ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

4KB 1578bytes

8KB 3218bytes

16KB 6498bytes

Data block size affacts the size of SGA(8i only)

The size of the SGA is determined by several initialization parameters. The parameters that most affect SGA size are:

DB_BLOCK_SIZE: The size, in bytes, of a single data block and database buffer.

DB_BLOCK_BUFFERS: The number of database buffers, each the size of DB_BLOCK_SIZE, allocated for the SGA. The total amount of space allocated for the database buffer cache in the SGA is DB_BLOCK_SIZE times DB_BLOCK_BUFFERS.

Please make a full and up-to-date backup of your database before export and import

Wednesday, October 11, 2006

changes of data types between 9.2 and 10.2

9.2

char column maximum size: 2000 bytes

long raw column maximum size: 2G

long column maximum size: 2G

raw column maximum size:2000 bytes

blob, clob, nclob maximum size: 4G

bfile maximum size: 4G

10.2

char column maximum size: 2000 bytes

long raw column maximum size: 2G

long column maximum size: 2G

raw column maximum size:2000 bytes

blob, clob, nclob maximum size: 8T~128T

bfile maximum size: 4G

char column maximum size: 2000 bytes

long raw column maximum size: 2G

long column maximum size: 2G

raw column maximum size:2000 bytes

blob, clob, nclob maximum size: 4G

bfile maximum size: 4G

10.2

char column maximum size: 2000 bytes

long raw column maximum size: 2G

long column maximum size: 2G

raw column maximum size:2000 bytes

blob, clob, nclob maximum size: 8T~128T

bfile maximum size: 4G

Database Hangs for DML Activity ORA-16038, ORA-00354, ORA-00312

fact: Oracle Server - Enterprise Edition 8.1.7.4.0

fact: Oracle Server - Enterprise Edition 9.0.1

fact: Oracle Server - Enterprise Edition 9.2

symptom: Database Hangs for DML Activity ORA-16038, ORA-00354, ORA-00312

symptom: Database hangs for dml (insert, update and delete) activity

symptom: ORA-16038: log %s sequence# %s cannot be archived

symptom: ORA-00354: corrupt redo log block header

symptom: ORA-00312: online log %s thread %s: '%s'

cause: Most probable a hardware failure caused an online redolog corruption.

If database is open then try for log number that is corrupted (check from error or v$log):

1 alter database clear logfile group;

When not working (unlikely to succeed) then:

2 alter database clear unarchived logfile group;

If this is successfully, the db is running (not hanging for dml activity anymore) but you miss an archive. This means you have to make a backup first, as long as the backup is not complete you cannot perform media recovery using previous backup as you miss an archive.

3 if 1 and 2 both do not work you will have to perform an incomplete recovery(注1). Shutdown db, restore all datafiles (only datafiles) of a backup and startup mount and issue recover database until cancel and recover until corrupted logfile, then open with alter database open resetlogs; You have dataloss (last corrupted logfile) and you first have to make a new backup after db opens).If the database is closed and cannot be opened you only have option 3.

注1:

以上是metalink提供的解决办法,缺点是情况3时会丢失数据。

实际情况中发生类似3的时候,如果能够正常关闭数据库(immediate),可以先冷备数据库,尝试不用restore,直接recover database until cancel,然后open resetlogs。

这样不会丢失数据。

fact: Oracle Server - Enterprise Edition 9.0.1

fact: Oracle Server - Enterprise Edition 9.2

symptom: Database Hangs for DML Activity ORA-16038, ORA-00354, ORA-00312

symptom: Database hangs for dml (insert, update and delete) activity

symptom: ORA-16038: log %s sequence# %s cannot be archived

symptom: ORA-00354: corrupt redo log block header

symptom: ORA-00312: online log %s thread %s: '%s'

cause: Most probable a hardware failure caused an online redolog corruption.

If database is open then try for log number that is corrupted (check from error or v$log):

1 alter database clear logfile group

When not working (unlikely to succeed) then:

2 alter database clear unarchived logfile group

If this is successfully, the db is running (not hanging for dml activity anymore) but you miss an archive. This means you have to make a backup first, as long as the backup is not complete you cannot perform media recovery using previous backup as you miss an archive.

3 if 1 and 2 both do not work you will have to perform an incomplete recovery(注1). Shutdown db, restore all datafiles (only datafiles) of a backup and startup mount and issue recover database until cancel and recover until corrupted logfile, then open with alter database open resetlogs; You have dataloss (last corrupted logfile) and you first have to make a new backup after db opens).If the database is closed and cannot be opened you only have option 3.

注1:

以上是metalink提供的解决办法,缺点是情况3时会丢失数据。

实际情况中发生类似3的时候,如果能够正常关闭数据库(immediate),可以先冷备数据库,尝试不用restore,直接recover database until cancel,然后open resetlogs。

这样不会丢失数据。

Tuesday, October 10, 2006

Points to Consider for Full Database Exports and Imports

A full database export and import can be a good way to replicate or clean up a database. However, to avoid problems be sure to keep the following points in mind:

1.A full export does not export triggers owned by schema SYS. You must manually re-create SYS triggers either before or after the full import. Oracle recommends that you re-create them after the import in case they define actions that would impede progress of the import.

2.If possible, before beginning, make a physical copy of the exported database and the database into which you intend to import. This ensures that any mistakes are reversible.

3.Before you begin the export, it is advisable to produce a report that includes the following information:

(1)A list of tablespaces and datafiles

(2)A list of rollback segments

(3)A count, by user, of each object type such as tables, indexes, and so on

This information lets you ensure that tablespaces have already been created and that the import was successful

4.If you are creating a completely new database from an export, remember to create an extra rollback segment in SYSTEM and to make it available in your initialization parameter file (init.ora)before proceeding with the import.

5.When you perform the import, ensure you are pointing at the correct instance. This is very important because on some UNIX systems, just the act of entering a subshell can change the database against which an import operation was performed.

6.Do not perform a full import on a system that has more than one database unless you are certain that all tablespaces have already been created. A full import creates any undefined tablespaces using the same datafile names as the exported database. This can result in problems in the following situations:

(1)If the datafiles belong to any other database, they will become corrupted. This is especially true if the exported database is on the same system, because its datafiles will be reused by the database into which you are importing.

(2)

If the datafiles have names that conflict with existing operating system files.

1.A full export does not export triggers owned by schema SYS. You must manually re-create SYS triggers either before or after the full import. Oracle recommends that you re-create them after the import in case they define actions that would impede progress of the import.

2.If possible, before beginning, make a physical copy of the exported database and the database into which you intend to import. This ensures that any mistakes are reversible.

3.Before you begin the export, it is advisable to produce a report that includes the following information:

(1)A list of tablespaces and datafiles

(2)A list of rollback segments

(3)A count, by user, of each object type such as tables, indexes, and so on

This information lets you ensure that tablespaces have already been created and that the import was successful

4.If you are creating a completely new database from an export, remember to create an extra rollback segment in SYSTEM and to make it available in your initialization parameter file (init.ora)before proceeding with the import.

5.When you perform the import, ensure you are pointing at the correct instance. This is very important because on some UNIX systems, just the act of entering a subshell can change the database against which an import operation was performed.

6.Do not perform a full import on a system that has more than one database unless you are certain that all tablespaces have already been created. A full import creates any undefined tablespaces using the same datafile names as the exported database. This can result in problems in the following situations:

(1)If the datafiles belong to any other database, they will become corrupted. This is especially true if the exported database is on the same system, because its datafiles will be reused by the database into which you are importing.

(2)

If the datafiles have names that conflict with existing operating system files.

Removing a Node from a 10g RAC Cluster

Metalink:Note:269320.1

PURPOSE

-------------

The purpose of this note is to provide the user with a document that can be used as a guide to remove a cluster node from an Oracle 10g RealApplications environment.

REMOVING A NODE FROM A 10g RAC CLUSTER

--------------------------------------------------------------

If you have to remove a node from a RAC 10g database, even if the node will no longer be available to the environment, there is a certain amount of cleanup that needs to be done. The remaining nodes need to be informed of the change of status of the departing node.

The most important 3 steps that need to be followed are;

A. Remove the instance using DBCA.

B. Remove the node from the cluster.

C. Reconfigure the OS and remaining hardware.

Here is a breakdown of the above steps.

A. Remove the instance using DBCA.

--------------------------------------

1.Verify that you have a good backup of the OCR (Oracle Configuration Repository) using ocrconfig -showbackup.

2. Run DBCA from one of the nodes you are going to keep. Leave the database up and also leave the departing instance up and running.

3. Choose "Instance Management"

4. Choose "Delete an instance"

5.On the next screen, select the cluster database from which you will delete an instance. Supply the system privilege username and password.

6. On the next screen, a list of cluster database instances will appear. Highlight the instance you would like to delete then click next.

7. If you have services configured, reassign the services. Modify the services so that each service can run on one of the remaining instances. Set "not used" for each service regarding the instance that is to be deleted. Click Finish.

8. If your database is in archive log mode you may encounter the following errors:

ORA-350

ORA-312

This may occur because the DBCA cannot drop the current log, as it needs archiving. This issue is fixed in the 10.1.0.3 patchset. But previous to this patchset you should click the ignore button and when the DBCA completes, manually archive the logs for the deleted instance and dropt the log group.

SQL> alter system archive log all;

SQL> alter database drop logfile group 2;

9. Verify that the dropped instance's redo thread has been removed by querying v$log. If for any reason the redo thread is not disabled then disable the thread.

SQL> alter database disable public thread 2;

10.Verify that the instance was removed from the OCR (Oracle Configuration Repository) with the following commands:

srvctl config database -d

$ORA_CRS_HOME/bin/./crs_stat

11. If this node had an ASM instance and the node will no longer be a part of the cluster you will now need to remove the ASM instance with:

srvctl stop asm -n

srvctl remove asm -n

Verify that asm is removed with:

srvctl config asm -n

B. Remove the Node from the Cluster

---------------------------------------------

Once the instance has been deleted. The process of removing the node from the cluster is a manual process. This is accomplished by running scripts on the deleted node to remove the CRS install, as well as scripts on the remaining nodes to update the node list. The following steps assume that the node to be removed is still functioning.

1.First as the root user determine the node name and node number on each node as stored in the Cluster Registry.

From the CRS_HOME/bin

olsnodes -n

1

2

2. To delete node number 2 first stop and remove the nodeapps on the node you are removing. Assuming that you have removed the ASM instance as the root user on a remaining node;

srvctl stop nodeapps -n

3.Run NETCA. Choose "Cluster Configuration".

4. Only select the node you are removing and click next.

5. Choose "Listener Configuration" and click next.

6. Choose "Delete" and delete any listeners configured on the node you are removing.

7. Run $ORA_CRS_HOME/bin/crs_stat. Make sure that all database resources are running on nodes that are going to be kept.

For example:

NAME=ora..db

TYPE=application

TARGET=ONLINE

STATE=ONLINE on

Ensure that this resource is not running on a node that will be removed. Use $ORA_CRS_HOME/bin/crs_relocate to perform this.

Example:

crs_relocate ora..db

8. As the root user, remove the nodeapps on the node you are removing.

srvctl remove nodeapps -n

9. Next as the Oracle user run the installer with the updateNodeList option on the node you are deleting.

a. DISPLAY=ipaddress:0.0; export DISPLAY

This should be set even though the gui does not run.

b. $ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=/u01/app/oracle/10g CLUSTER_NODES=, ,

This should be run as one command. With this command we are defining the nodes that now are part of the cluster.

10. Change to the root user to finish the removal on the node that is being removed(在准备删除的节点上运行). This command will stop the CRS stack and delete the ocr.loc file on the node to be removed. The nosharedvar option assumes the ocr.loc file is not on a shared file sytem. If it does exist on a shared file system then specify sharedvar instead. Run the rootdelete.sh script from $ORA_CRS_HOME/install.

Example:

$ORA_CRS_HOME/install/./rootdelete.sh remote nosharedvar

11. On a node that will be kept, the root user should run the rootdeletenode.sh script from the $ORA_CRS_HOME/install directory. When running this script from the CRS home specify both the node name and the node number. The node name and the node number are visiable in olsnodes -n. Also do NOT put a space after the comma between the two.

olsnodes -n

1

2

$ORA_CRS_HOME/install> ./.rootdeletenode.sh,2

12. Confirm success by running OLSNODES.

$ORA_CRS_HOME/bin>: ./olsnodes -n

1

13. Now switch back to the oracle user account and run the same runInstaller command as before. Run it this time from the ORA_CRS_HOME instead of the ORACLE_HOME. Specify all of the remaining nodes.

a. DISPLAY=ipaddress:0.0; export DISPLAY

b. $ORA_CRS_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME= CLUSTER_NODES=, , CRS=TRUE

14. Once the node updates are done you will need to manually delete the $ORACLE_HOME and $CRS_HOME from the node to be expunged, unless, of course, either of these is on a shared file system that is still being used.

a. $ORACLE_HOME>: rm -rf *

b. $CRS_HOME> : rm -rf * (as root)

15. Next, as root, from the deleted node, verify that all init scripts and soft links are removed:

Sun:

rm /etc/init.d/init.cssd

rm /etc/init.d/init.crs

rm /etc/init.d/init.crsd

rm /etc/init.d/init.evmd

rm /etc/rc3.d/K96init.crs

rm /etc/rc3.d/S96init.crs

rm -Rf /var/opt/oracle/scls_scr

rm -Rf /var/opt/oracle/oprocd

Linux:

rm -f /etc/init.d/init.cssd

rm -f /etc/init.d/init.crs

rm -f /etc/init.d/init.crsd

rm -f /etc/init.d/init.evmd

rm -f /etc/rc2.d/K96init.crs

rm -f /etc/rc2.d/S96init.crs

rm -f /etc/rc3.d/K96init.crs

rm -f /etc/rc3.d/S96init.crs

rm -f /etc/rc5.d/K96init.crs

rm -f /etc/rc5.d/S96init.crs

rm -Rf /etc/oracle/scls_scr

HP-UX:

rm /sbin/init.d/init.cssd

rm /sbin/init.d/init.crs

rm /sbin/init.d/init.crsd

rm /sbin/init.d/init.evmd

rm /sbin/rc3.d/K960init.crs

rm /sbin/rc3.d/S960init.crs

rm /sbin/rc2.d/K960init.crs

rm /sbin/rc2.d/K001init.crs

rm -Rf /var/opt/oracle/scls_scr

rm -Rf /var/opt/oracle/oprocd

HP Tru64:

rm /sbin/init.d/init.cssd

rm /sbin/init.d/init.crs

rm /sbin/init.d/init.crsd

rm /sbin/init.d/init.evmd

rm /sbin/rc3.d/K96init.crs

rm /sbin/rc3.d/S96init.crs

rm -Rf /var/opt/oracle/scls_scr

rm -Rf /var/opt/oracle/oprocd

IBM AIX:

rm /etc/init.cssd

rm /etc/init.crs

rm /etc/init.crsd

rm /etc/init.evmd

rm /etc/rc.d/rc2.d/K96init.crs

rm /etc/rc.d/rc2.d/S96init.crs

rm -Rf /etc/oracle/scls_scr

rm -Rf /etc/oracle/oprocd

16. You can also remove the /etc/oracle directory, the /etc/oratab file, and the Oracle inventory (if desired)

17.To remove an ADDITIONAL ORACLE_HOME or EM_HOME from the inventory on all remaining nodes, run the installer to update the node list. Example (if removing node 2):

runInstaller -updateNodeList -local ORACLE_HOME=$ORACLE_HOME CLUSTER_NODES=node1,node3,node4

PURPOSE

-------------

The purpose of this note is to provide the user with a document that can be used as a guide to remove a cluster node from an Oracle 10g RealApplications environment.

REMOVING A NODE FROM A 10g RAC CLUSTER

--------------------------------------------------------------

If you have to remove a node from a RAC 10g database, even if the node will no longer be available to the environment, there is a certain amount of cleanup that needs to be done. The remaining nodes need to be informed of the change of status of the departing node.

The most important 3 steps that need to be followed are;

A. Remove the instance using DBCA.

B. Remove the node from the cluster.

C. Reconfigure the OS and remaining hardware.

Here is a breakdown of the above steps.

A. Remove the instance using DBCA.

--------------------------------------

1.Verify that you have a good backup of the OCR (Oracle Configuration Repository) using ocrconfig -showbackup.

2. Run DBCA from one of the nodes you are going to keep. Leave the database up and also leave the departing instance up and running.

3. Choose "Instance Management"

4. Choose "Delete an instance"

5.On the next screen, select the cluster database from which you will delete an instance. Supply the system privilege username and password.

6. On the next screen, a list of cluster database instances will appear. Highlight the instance you would like to delete then click next.

7. If you have services configured, reassign the services. Modify the services so that each service can run on one of the remaining instances. Set "not used" for each service regarding the instance that is to be deleted. Click Finish.

8. If your database is in archive log mode you may encounter the following errors:

ORA-350

ORA-312

This may occur because the DBCA cannot drop the current log, as it needs archiving. This issue is fixed in the 10.1.0.3 patchset. But previous to this patchset you should click the ignore button and when the DBCA completes, manually archive the logs for the deleted instance and dropt the log group.

SQL> alter system archive log all;

SQL> alter database drop logfile group 2;

9. Verify that the dropped instance's redo thread has been removed by querying v$log. If for any reason the redo thread is not disabled then disable the thread.

SQL> alter database disable public thread 2;

10.Verify that the instance was removed from the OCR (Oracle Configuration Repository) with the following commands:

srvctl config database -d

$ORA_CRS_HOME/bin/./crs_stat

11. If this node had an ASM instance and the node will no longer be a part of the cluster you will now need to remove the ASM instance with:

srvctl stop asm -n

srvctl remove asm -n

Verify that asm is removed with:

srvctl config asm -n

B. Remove the Node from the Cluster

---------------------------------------------

Once the instance has been deleted. The process of removing the node from the cluster is a manual process. This is accomplished by running scripts on the deleted node to remove the CRS install, as well as scripts on the remaining nodes to update the node list. The following steps assume that the node to be removed is still functioning.

1.First as the root user determine the node name and node number on each node as stored in the Cluster Registry.

From the CRS_HOME/bin

olsnodes -n

2. To delete node number 2 first stop and remove the nodeapps on the node you are removing. Assuming that you have removed the ASM instance as the root user on a remaining node;

srvctl stop nodeapps -n

3.Run NETCA. Choose "Cluster Configuration".

4. Only select the node you are removing and click next.

5. Choose "Listener Configuration" and click next.

6. Choose "Delete" and delete any listeners configured on the node you are removing.

7. Run $ORA_CRS_HOME/bin/crs_stat. Make sure that all database resources are running on nodes that are going to be kept.

For example:

NAME=ora.

TYPE=application

TARGET=ONLINE

STATE=ONLINE on

Ensure that this resource is not running on a node that will be removed. Use $ORA_CRS_HOME/bin/crs_relocate to perform this.

Example:

crs_relocate ora.

8. As the root user, remove the nodeapps on the node you are removing.

srvctl remove nodeapps -n

9. Next as the Oracle user run the installer with the updateNodeList option on the node you are deleting.

a. DISPLAY=ipaddress:0.0; export DISPLAY

This should be set even though the gui does not run.

b. $ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=/u01/app/oracle/10g CLUSTER_NODES=

This should be run as one command. With this command we are defining the nodes that now are part of the cluster.

10. Change to the root user to finish the removal on the node that is being removed(在准备删除的节点上运行). This command will stop the CRS stack and delete the ocr.loc file on the node to be removed. The nosharedvar option assumes the ocr.loc file is not on a shared file sytem. If it does exist on a shared file system then specify sharedvar instead. Run the rootdelete.sh script from $ORA_CRS_HOME/install.

Example:

$ORA_CRS_HOME/install/./rootdelete.sh remote nosharedvar

11. On a node that will be kept, the root user should run the rootdeletenode.sh script from the $ORA_CRS_HOME/install directory. When running this script from the CRS home specify both the node name and the node number. The node name and the node number are visiable in olsnodes -n. Also do NOT put a space after the comma between the two.

olsnodes -n

$ORA_CRS_HOME/install> ./.rootdeletenode.sh

12. Confirm success by running OLSNODES.

$ORA_CRS_HOME/bin>: ./olsnodes -n

13. Now switch back to the oracle user account and run the same runInstaller command as before. Run it this time from the ORA_CRS_HOME instead of the ORACLE_HOME. Specify all of the remaining nodes.

a. DISPLAY=ipaddress:0.0; export DISPLAY

b. $ORA_CRS_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=

14. Once the node updates are done you will need to manually delete the $ORACLE_HOME and $CRS_HOME from the node to be expunged, unless, of course, either of these is on a shared file system that is still being used.

a. $ORACLE_HOME>: rm -rf *

b. $CRS_HOME> : rm -rf * (as root)

15. Next, as root, from the deleted node, verify that all init scripts and soft links are removed:

Sun:

rm /etc/init.d/init.cssd

rm /etc/init.d/init.crs

rm /etc/init.d/init.crsd

rm /etc/init.d/init.evmd

rm /etc/rc3.d/K96init.crs

rm /etc/rc3.d/S96init.crs

rm -Rf /var/opt/oracle/scls_scr

rm -Rf /var/opt/oracle/oprocd

Linux:

rm -f /etc/init.d/init.cssd

rm -f /etc/init.d/init.crs

rm -f /etc/init.d/init.crsd

rm -f /etc/init.d/init.evmd

rm -f /etc/rc2.d/K96init.crs

rm -f /etc/rc2.d/S96init.crs

rm -f /etc/rc3.d/K96init.crs

rm -f /etc/rc3.d/S96init.crs

rm -f /etc/rc5.d/K96init.crs

rm -f /etc/rc5.d/S96init.crs

rm -Rf /etc/oracle/scls_scr

HP-UX:

rm /sbin/init.d/init.cssd

rm /sbin/init.d/init.crs

rm /sbin/init.d/init.crsd

rm /sbin/init.d/init.evmd

rm /sbin/rc3.d/K960init.crs

rm /sbin/rc3.d/S960init.crs

rm /sbin/rc2.d/K960init.crs

rm /sbin/rc2.d/K001init.crs

rm -Rf /var/opt/oracle/scls_scr

rm -Rf /var/opt/oracle/oprocd

HP Tru64:

rm /sbin/init.d/init.cssd

rm /sbin/init.d/init.crs

rm /sbin/init.d/init.crsd

rm /sbin/init.d/init.evmd

rm /sbin/rc3.d/K96init.crs

rm /sbin/rc3.d/S96init.crs

rm -Rf /var/opt/oracle/scls_scr

rm -Rf /var/opt/oracle/oprocd

IBM AIX:

rm /etc/init.cssd

rm /etc/init.crs

rm /etc/init.crsd

rm /etc/init.evmd

rm /etc/rc.d/rc2.d/K96init.crs

rm /etc/rc.d/rc2.d/S96init.crs

rm -Rf /etc/oracle/scls_scr

rm -Rf /etc/oracle/oprocd

16. You can also remove the /etc/oracle directory, the /etc/oratab file, and the Oracle inventory (if desired)

17.To remove an ADDITIONAL ORACLE_HOME or EM_HOME from the inventory on all remaining nodes, run the installer to update the node list. Example (if removing node 2):

runInstaller -updateNodeList -local ORACLE_HOME=$ORACLE_HOME CLUSTER_NODES=node1,node3,node4

Saturday, October 07, 2006

the way to know which transaction is using a large number of undo space

select used_ublk from v$transaction order by used_ublk desc;

Subscribe to:

Posts (Atom)